Scaling up: how increasing inputs has made artificial intelligence more capable

The path to recent advanced AI systems has been more about building larger systems than making scientific breakthroughs.

For most of the history of artificial intelligence (AI), many researchers expected that building truly capable systems would require a long series of scientific breakthroughs: revolutionary algorithms, deep insights into human cognition, or fundamental advances in our understanding of the brain. While scientific advances have played a role, recent AI progress has revealed an unexpected insight: a lot of the recent improvement in AI capabilities has come simply from scaling up existing AI systems.1

Here, scaling means deploying more computational power, using larger datasets, and building bigger models. This approach has worked surprisingly well so far.2 Just a few years ago, state-of-the-art AI systems struggled with basic tasks like counting.3,4 Today, they can solve complex math problems, write software, create extremely realistic images and videos, and discuss academic topics.

This article will provide a brief overview of scaling in AI over the past years. The data comes from Epoch, an organization that analyzes trends in computing, data, and investments to understand where AI might be headed.5 Epoch maintains the most extensive dataset on AI models and regularly publishes key figures on AI growth and change.

What is scaling in AI models?

Let’s briefly break down what scaling means in AI. Scaling is about increasing three main things during training, which typically need to grow together:

- The amount of data used for training the AI;

- The model’s size, measured in “parameters”;

- Computational resources, often called "compute" in AI.

The idea is simple but powerful: bigger AI systems, trained on more data and using more computational resources, tend to perform better. Even without substantial changes to the algorithms, this approach often leads to better performance across many tasks.6

Here is another reason why this is important: as researchers scale up these AI systems, the systems not only improve at the tasks they were trained on but can sometimes develop new abilities that they did not have at a smaller scale.7 For example, language models initially struggled with simple arithmetic tests like three-digit addition, but larger models could handle these easily once they reached a certain size.8 The transition wasn't a smooth, incremental improvement but a more abrupt leap in capabilities.

This abrupt jump in capability, rather than steady improvement, can be concerning. If, for example, models suddenly develop unexpected and potentially harmful behaviors simply as a result of getting bigger, those behaviors would be harder to anticipate and control.

This makes tracking the three inputs to scaling (data, parameters, and compute) important.

What are the three components of scaling up AI models?

Data: scaling up the training data

One way to view today's AI models is by looking at them as very sophisticated pattern recognition systems. They work by identifying and learning from statistical regularities in the text, images, or other data on which they are trained. The more data the model has access to, the more it can learn about the nuances and complexities of the knowledge domain in which it’s designed to operate.9

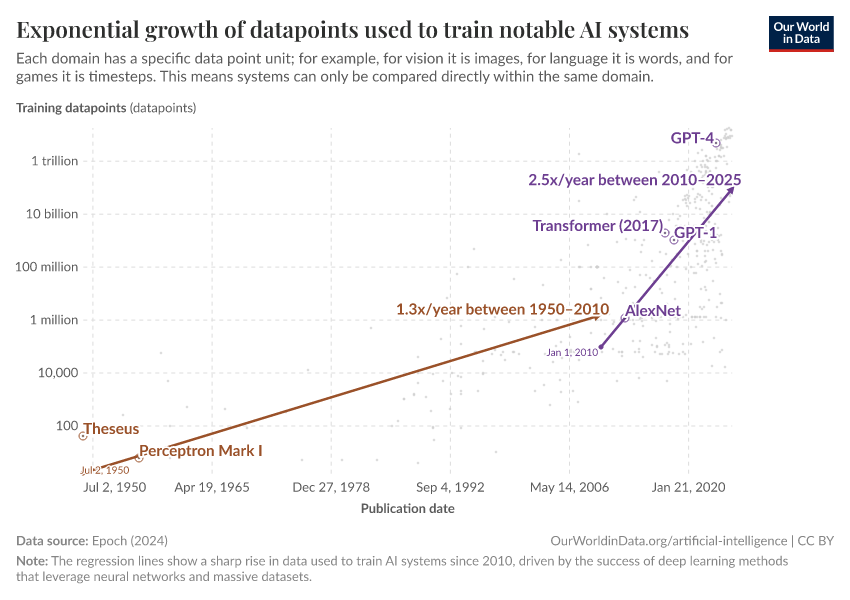

In 1950, Claude Shannon built one of the earliest examples of “AI”: a robotic mouse named Theseus that could "remember" its path through a maze using simple relay circuits. Each wall Theseus bumped into became a data point, allowing it to learn the correct route. The total number of walls or data points was 40. You can find this data point in the chart; it is the first one.

While Theseus stored simple binary states in relay circuits, modern AI systems utilize vast neural networks, which can learn much more complex patterns and relationships and thus process billions of data points.

All recent notable AI models, especially large, state-of-the-art ones, rely on vast amounts of training data. With the y-axis displayed on a logarithmic scale, the chart shows that the data used to train AI models has grown exponentially: from 40 data points for Theseus to trillions of data points for the largest modern systems, in a little more than seven decades.

Since 2010, the training data has doubled approximately every nine to ten months. You can see this rapid growth in the chart, shown by the purple line extending from the start of 2010 to October 2024, the latest data point as I write this article.10
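A doubling time can be easier to compare with other trends when it is expressed as growth per year. The following is a minimal sketch of that conversion; the function name `annual_growth_factor` is made up for illustration, and the calculation assumes smooth exponential growth, which real data only approximates.

```python
def annual_growth_factor(doubling_time_months: float) -> float:
    """Factor by which a quantity grows in 12 months, given its doubling time."""
    return 2 ** (12 / doubling_time_months)


# Doubling times quoted above for training data since 2010.
for months in (9, 10):
    factor = annual_growth_factor(months)
    print(f"Doubling every {months} months is about {factor:.2f}x per year")
```

Under this assumption, doubling every nine to ten months corresponds to training data growing by roughly 2.3 to 2.5 times each year.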

Datasets used for training large language models, in particular, have experienced an even faster growth rate, tripling in size each year since 2010. Large language models process text by breaking it into tokens — basic units the model can encode and understand. A token doesn't directly correspond to one word, but on average, three English words correspond to about four tokens.

GPT-2, released in 2019, is estimated to have been trained on 4 billion tokens, roughly equivalent to 3 billion words. To put this in perspective, as of September 2024, the English Wikipedia contained around 4.6 billion words.11 In comparison, GPT-4, released in 2023, was trained on almost 13 trillion tokens, or about 9.75 trillion words.12 This means that GPT-4’s training data was equivalent to over 2000 times the amount of text of the entire English Wikipedia.
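The arithmetic behind this comparison can be checked with a short sketch. It uses only the figures quoted above (about three English words per four tokens, and 4.6 billion words in the English Wikipedia); the names `WORDS_PER_TOKEN`, `WIKIPEDIA_WORDS`, and `tokens_to_words` are chosen here for illustration.

```python
WORDS_PER_TOKEN = 3 / 4   # about three English words per four tokens
WIKIPEDIA_WORDS = 4.6e9   # English Wikipedia as of September 2024


def tokens_to_words(tokens: float) -> float:
    """Convert a token count into an approximate English word count."""
    return tokens * WORDS_PER_TOKEN


gpt2_words = tokens_to_words(4e9)    # GPT-2: about 4 billion tokens
gpt4_words = tokens_to_words(13e12)  # GPT-4: about 13 trillion tokens

print(f"GPT-2: {gpt2_words:.2e} words")
print(f"GPT-4: {gpt4_words:.2e} words")
print(f"GPT-4 vs. Wikipedia: {gpt4_words / WIKIPEDIA_WORDS:,.0f}x")
```

The ratio comes out at a little over 2,100, which is where the "over 2000 times" figure comes from. Because the words-per-token ratio is an average for English text, these are order-of-magnitude estimates rather than exact counts.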

As we use more data to train AI systems, we might eventually run out of high-quality human-generated materials like books, articles, and research papers. Some researchers predict we could exhaust useful training materials within the next few decades.13 While AI models themselves can generate vast amounts of data, training AI on machine-generated materials could create problems, making the models less accurate and more repetitive.14

Parameters: scaling up the model size

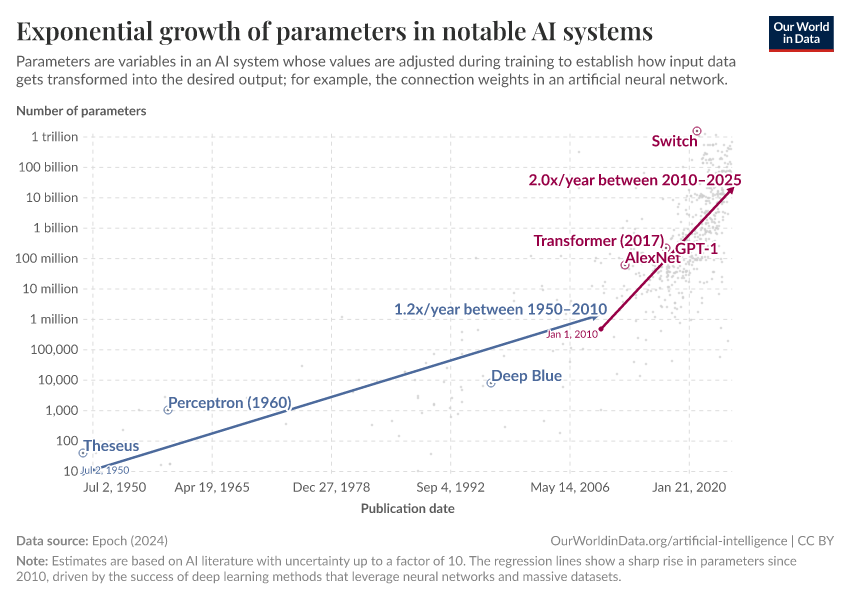

Increasing the amount of training data lets AI models learn from much more information than ever before. However, to pick up on the patterns in this data and learn effectively, models need what are called "parameters". Parameters are a bit like knobs that can be tweaked to improve how the model processes information and makes predictions. As the amount of training data grows, models need more capacity to capture all the details in the training data. This means larger datasets typically require the models to have more parameters to learn effectively.

Early neural networks had hundreds or thousands of parameters. With its simple maze-learning circuitry, Theseus was a model with just 40 parameters, equivalent to the number of walls it encountered. Recent large models, such as GPT-3, boast up to 175 billion parameters.15 While the raw number may seem large, this roughly translates into 700 GB if stored on a disk, which is easily manageable by today’s computers.
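The 700 GB figure is consistent with storing each parameter as a 32-bit (4-byte) number. That storage format is an assumption made here for illustration, since the article does not state it, and the helper `model_size_gb` is a hypothetical name. A minimal sketch:

```python
def model_size_gb(num_parameters: float, bytes_per_parameter: int = 4) -> float:
    """Approximate storage size in gigabytes (1 GB = 1e9 bytes).

    bytes_per_parameter defaults to 4, i.e. 32-bit floats (an assumption).
    """
    return num_parameters * bytes_per_parameter / 1e9


gpt3_parameters = 175e9

print(f"32-bit parameters: {model_size_gb(gpt3_parameters):.0f} GB")
print(f"16-bit parameters: {model_size_gb(gpt3_parameters, 2):.0f} GB")
```

With 4 bytes per parameter the result is 700 GB; a lower-precision format, such as 16-bit numbers, would halve that.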

The chart shows how the number of parameters in AI models has skyrocketed over time. Since 2010, the number of AI model parameters has approximately doubled every year. The highest estimated number of parameters recorded by Epoch is 1.6 trillion in the QMoE model.

While bigger AI models can do more, they also face some problems. One major issue is called "overfitting". This happens when an AI becomes “too optimized” for processing the particular data it was trained on but struggles with new data. To combat this, researchers employ two strategies: implementing specialized techniques for more generalized learning and expanding the volume and diversity of training data.

Compute: scaling up computational resources

As AI models grow in data and parameters, they require exponentially more computational resources. These resources, commonly referred to as “compute” in AI research, are typically measured in total floating-point operations (“FLOP”), where each FLOP represents a single arithmetic calculation like addition or multiplication.

The computational needs for AI training have changed dramatically over time. With their modest data and parameter counts, early models could be trained in hours on simple hardware. Today’s most advanced models require hundreds of days of continuous computations, even with tens of thousands of special-purpose computers.

The chart shows that the computation used to train each AI model — shown on the vertical axis — has consistently and exponentially increased over the last few decades. From 1950 to 2010, compute doubled roughly every two years. However, since 2010, this growth has accelerated dramatically, now doubling approximately every six months, with the most compute-intensive model reaching 50 billion petaFLOP as I write this article.16

To put this scale in perspective, a single high-end graphics card like the NVIDIA GeForce RTX 3090 (widely used in AI research) running at full capacity for an entire year would complete just 1.1 million petaFLOP of computation. 50 billion petaFLOP is approximately 45,455 times that amount.
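The comparison can be reproduced in a few lines. The two compute figures come from the text, and one petaFLOP is one quadrillion FLOP. The 50,000-card cluster and the `wall_clock_days` helper are hypothetical, added only to show how the work might be spread out, and the calculation assumes the work divides perfectly across cards, which real training runs do not achieve.

```python
FLOP_PER_PETAFLOP = 1e15     # one petaFLOP = one quadrillion FLOP
LARGEST_RUN_PETAFLOP = 50e9  # most compute-intensive model in the chart
GPU_YEAR_PETAFLOP = 1.1e6    # one RTX 3090 at full capacity for a year

largest_run_flop = LARGEST_RUN_PETAFLOP * FLOP_PER_PETAFLOP
gpu_years = LARGEST_RUN_PETAFLOP / GPU_YEAR_PETAFLOP


def wall_clock_days(total_gpu_years: float, num_gpus: int) -> float:
    """Idealized training time if the work splits perfectly across num_gpus cards."""
    return total_gpu_years * 365 / num_gpus


print(f"Total compute: {largest_run_flop:.1e} FLOP")
print(f"Single-card equivalent: {gpu_years:,.0f} GPU-years")
print(f"Hypothetical 50,000-card cluster: {wall_clock_days(gpu_years, 50_000):.0f} days")
```

Even under these idealized assumptions, tens of thousands of consumer cards would need hundreds of days, which is why the largest training runs rely on faster, special-purpose hardware.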

Achieving computations on this scale requires large energy and hardware investments. Training some of the latest models has been estimated to cost up to $40 million, making it accessible only to a few well-funded organizations.

Compute, data, and parameters tend to scale at the same time

Compute, data, and parameters are closely interconnected when it comes to scaling AI models. When AI models are trained on more data, there are more things to learn. To deal with the increasing complexity of the data, AI models, therefore, require more parameters to learn from the various features of the data. Adding more parameters to the model means that it needs more computational resources during training.
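One way to make this link concrete is a rule of thumb from the scaling-law literature (for example Kaplan et al., 2020, and Hoffmann et al., 2022): training compute is roughly 6 FLOP per parameter per training token. This approximation does not come from the article itself, and the GPT-3 training set size of about 300 billion tokens is taken from Brown et al. (2020), so treat the sketch below as an illustration under those assumptions. The function name `training_flop` is made up for this example.

```python
def training_flop(num_parameters: float, num_tokens: float) -> float:
    """Approximate training compute with the ~6 FLOP per parameter per token rule."""
    return 6 * num_parameters * num_tokens


# GPT-3: 175 billion parameters, assumed ~300 billion training tokens.
gpt3_flop = training_flop(175e9, 300e9)
print(f"GPT-3 estimate: {gpt3_flop:.2e} FLOP ({gpt3_flop / 1e15:.2e} petaFLOP)")

# Doubling both the model size and the dataset quadruples the compute needed.
scaled_flop = training_flop(2 * 175e9, 2 * 300e9)
print(f"Doubling both inputs multiplies compute by {scaled_flop / gpt3_flop:.0f}")
```

Because compute is approximately proportional to the product of parameters and data in this rule, growing the two together makes compute requirements rise much faster than either input alone.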

This interdependence means that data, parameters, and compute need to grow simultaneously. Today’s largest public datasets are about ten times bigger than what most AI models currently use, some containing hundreds of trillions of words. But without enough compute and parameters, AI models can’t yet use these for training.

What can we learn from these trends for the future of AI?

Companies are seeking large financial investments to develop and scale their AI models, with a growing focus on generative AI technologies. At the same time, the key hardware used for training (GPUs) is getting much cheaper and more powerful, with computing performance per dollar doubling roughly every 2.5 years.17 Some organizations are also now using more computational resources not just in training AI models but also during inference (the phase when models generate responses), as illustrated by OpenAI's latest o1 model.

These developments could help create more sophisticated AI technologies faster and cheaper. As companies invest more money and the necessary hardware improves, we might see significant improvements in what AI can do, including potentially unexpected new capabilities.

Because these changes could have major effects on our society, it's important that we track and understand these developments early on. To support this, we will update key metrics — such as the growth in computational resources, training data volumes, and model parameters — on a monthly basis. These updates will help monitor the rapid evolution of AI technologies and provide valuable insights into their trajectory.

Acknowledgments

I’d like to thank Max Roser, Daniel Bachler, Charlie Giattino, and Edouard Mathieu for their helpful comments and ideas for this article and visualizations.

Endnotes

Vaswani et al. (2017). Attention is all you need. Advances in neural information processing systems, 30.

Hestness et al. (2017). Deep learning scaling is predictable, empirically. arXiv preprint arXiv:1712.00409.

According to some accounts, GPT-2, a state-of-the-art language model by OpenAI at the time, was unable to reliably count to ten.

Bengio et al. (2023). Managing AI risks in an era of rapid progress. arXiv preprint arXiv:2310.17688.

Epoch (2023), "Key Trends and Figures in Machine Learning". Published online at epochai.org. Retrieved from: 'https://epochai.org/trends' [online resource].

Hoffmann et al. (2022). Training compute-optimal large language models. arXiv preprint arXiv:2203.15556.; Kaplan et al. (2020). Scaling laws for neural language models. arXiv preprint arXiv:2001.08361.

Wei et al. (2022). Emergent abilities of large language models. arXiv preprint arXiv:2206.07682.

Some researchers argue that identifying new skills in AI largely hinges on the metrics used for evaluation. As a result, unless the model shows outstanding performance in a specific task, its developing abilities may remain unrecognized before they are “perfect”, giving the impression that these skills suddenly emerged.

For instance, language models like GPT (Generative Pre-trained Transformer) are trained on datasets consisting of billions of words, enabling them to understand and generate human-like text.

The regression line for 2010 onward highlights the rapid growth driven largely by the success of deep learning methods—an approach where artificial neural networks learn and improve by analyzing vast amounts of data to identify patterns and make predictions.

You can see the size of OpenAI's models in this chart.

Villalobos et al. (2024). ‘Will we run out of data? Limits of LLM scaling based on human-generated data’. ArXiv [cs.LG]. arXiv. https://arxiv.org/abs/2211.04325

Shumailov et al. (2024). AI models collapse when trained on recursively generated data. Nature 631, 755–759. https://doi.org/10.1038/s41586-024-07566-y

Brown et al. (2020). Language models are few-shot learners. Advances in neural information processing systems, 33, 1877-1901.

One petaFLOP is equal to 1,000,000,000,000,000 (one quadrillion) FLOP.

Hobbhahn and Besiroglu (2022). Trends in GPU Price-Performance. Published online at epochai.org. Retrieved from: 'https://epochai.org/blog/trends-in-gpu-price-performance' [online resource]

Cite this work

Our articles and data visualizations rely on work from many different people and organizations. When citing this article, please also cite the underlying data sources. This article can be cited as:

Veronika Samborska (2025) - “Scaling up: how increasing inputs has made artificial intelligence more capable” Published online at OurWorldinData.org. Retrieved from: 'https://archive.ourworldindata.org/20260126-094752/scaling-up-ai.html' [Online Resource] (archived on January 26, 2026).

BibTeX citation

@article{owid-scaling-up-ai,
    author = {Veronika Samborska},
    title = {Scaling up: how increasing inputs has made artificial intelligence more capable},
    journal = {Our World in Data},
    year = {2025},
    note = {https://archive.ourworldindata.org/20260126-094752/scaling-up-ai.html}
}

Reuse this work freely

All visualizations, data, and code produced by Our World in Data are completely open access under the Creative Commons BY license. You have the permission to use, distribute, and reproduce these in any medium, provided the source and authors are credited.

The data produced by third parties and made available by Our World in Data is subject to the license terms from the original third-party authors. We will always indicate the original source of the data in our documentation, so you should always check the license of any such third-party data before use and redistribution.

All of our charts can be embedded in any site.