Computation used to train notable artificial intelligence systems, by domain

What you should know about this indicator

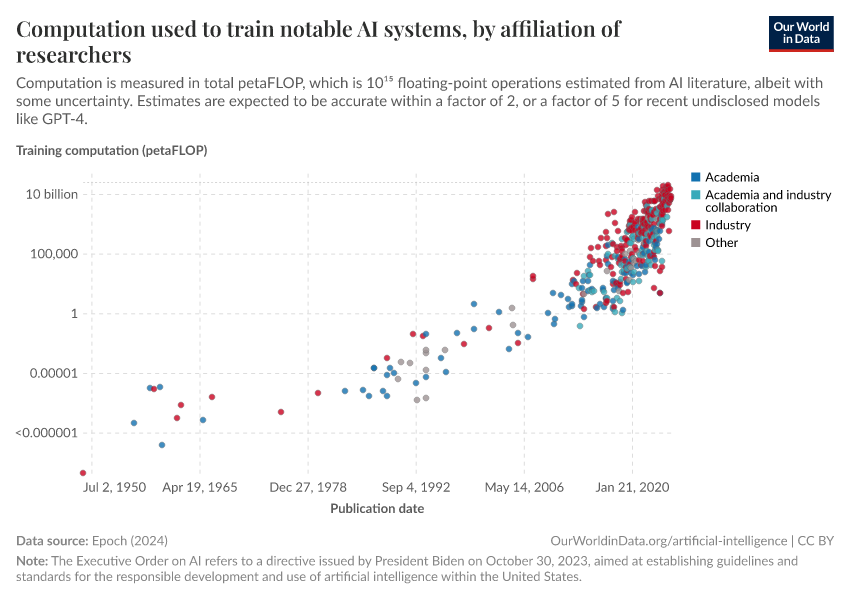

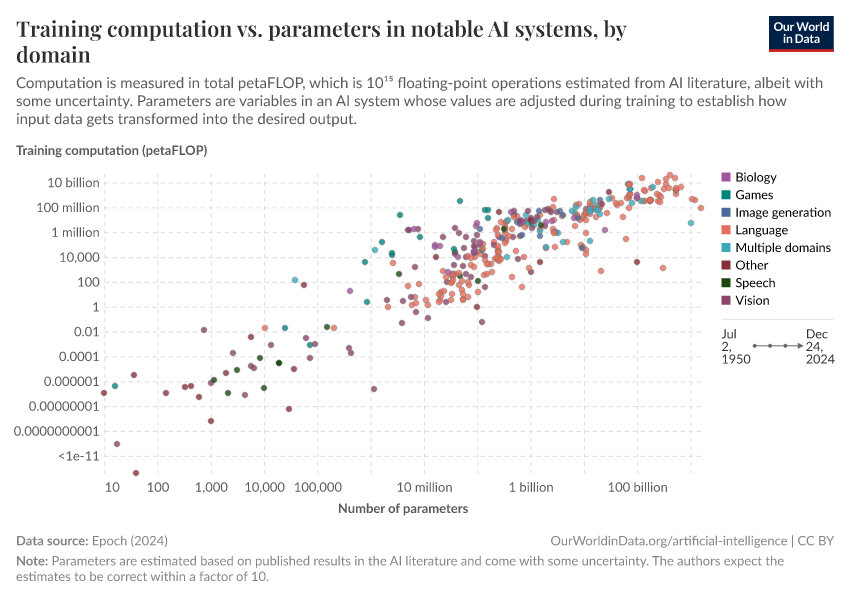

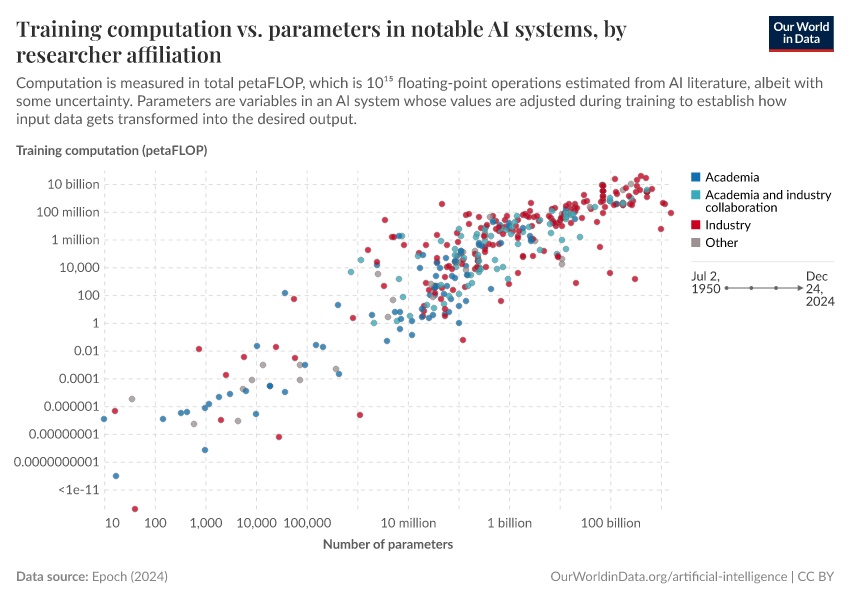

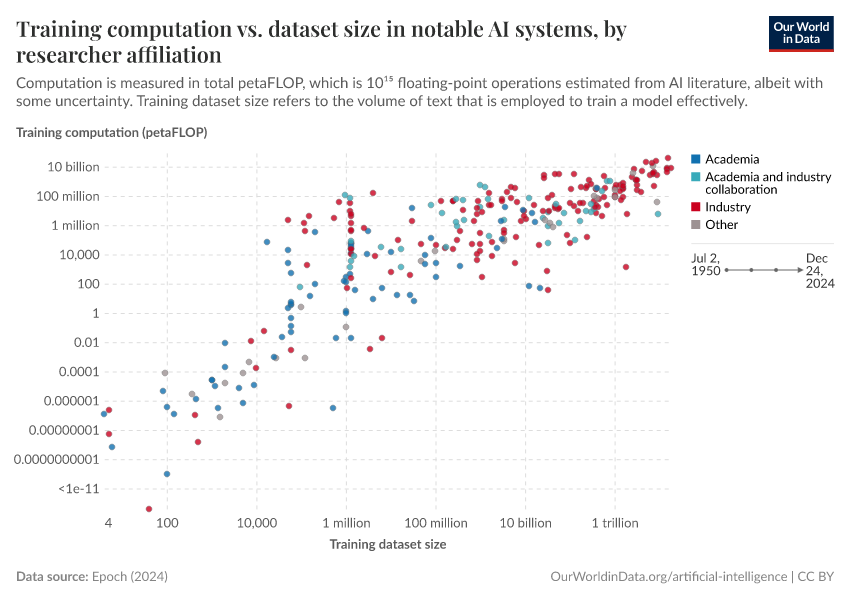

- In the context of artificial intelligence (AI), training computation is usually measured in floating-point operations, or “FLOP”. One FLOP is a single arithmetic operation on floating-point numbers, such as an addition, subtraction, multiplication, or division. Because AI systems require enormous amounts of computation, totals are commonly expressed in petaFLOP: one petaFLOP equals one quadrillion (10^15) FLOP.

- Modern AI systems are built on machine learning and deep learning techniques, which are computationally intensive. During training, a model processes large volumes of data and repeatedly adjusts its parameters to improve its performance. Each adjustment involves many arithmetic operations, so the total computation grows quickly.

- Several factors determine how much computation training requires. The size of the training dataset matters: the more data a model processes, the more operations training takes. The model's architecture matters too: larger and more complex models, for example those with more parameters, need more operations for each piece of data they process. Parallel processing, the simultaneous use of many processors, does not by itself reduce the total number of operations, but it makes much larger training runs feasible in a practical amount of time. Other design choices, such as how many times the model passes over the training data, also affect the total.
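The unit conversion and the role of model and dataset size can be illustrated with a short Python sketch. It converts a raw FLOP count to petaFLOP and estimates training computation with the widely used rule of thumb C ≈ 6 × N × D, where N is the number of model parameters and D the number of training tokens. That heuristic applies roughly to dense transformer language models and is an assumption here, not necessarily how each figure in this dataset was calculated; the function names and the example model size are hypothetical.

```python
# 1 petaFLOP = 10**15 FLOP (one quadrillion floating-point operations).
FLOP_PER_PETAFLOP = 10**15


def flop_to_petaflop(flop: float) -> float:
    """Convert a raw FLOP count to petaFLOP."""
    return flop / FLOP_PER_PETAFLOP


def estimate_training_flop(n_params: float, n_tokens: float) -> float:
    """Rough training-compute estimate for a dense transformer (hypothetical helper).

    Assumes the common heuristic C ~= 6 * N * D: roughly 2 FLOP per parameter
    per token for the forward pass and roughly 4 for the backward pass.
    """
    return 6 * n_params * n_tokens


if __name__ == "__main__":
    # Hypothetical model: 1 billion parameters trained on 20 billion tokens.
    flop = estimate_training_flop(1e9, 20e9)
    print(f"{flop:.2e} FLOP = {flop_to_petaflop(flop):,.0f} petaFLOP")
```

Under these assumptions, a model with 1 billion parameters trained on 20 billion tokens needs about 1.2 × 10^20 FLOP, or 120,000 petaFLOP. Doubling either the parameter count or the number of tokens doubles the estimate.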

Sources and processing

This data is based on the following sources

How we process data at Our World in Data

All data and visualizations on Our World in Data rely on data sourced from one or several original data providers. Preparing this original data involves several processing steps. Depending on the data, this can include standardizing country names and world region definitions, converting units, calculating derived indicators such as per capita measures, as well as adding or adapting metadata such as the name or the description given to an indicator.

At the link below you can find a detailed description of the structure of our data pipeline, including links to all the code used to prepare data across Our World in Data.

Reuse this work

- All data produced by third-party providers and made available by Our World in Data are subject to the license terms from the original providers. Our work would not be possible without the data providers we rely on, so we ask you to always cite them appropriately (see below). This is crucial to allow data providers to continue doing their work, enhancing, maintaining and updating valuable data.

- All data, visualizations, and code produced by Our World in Data are completely open access under the Creative Commons BY license. You have the permission to use, distribute, and reproduce these in any medium, provided the source and authors are credited.

Citations

How to cite this page

To cite this page overall, including any descriptions, FAQs or explanations of the data authored by Our World in Data, please use the following citation:

“Data Page: Computation used to train notable artificial intelligence systems, by domain”, part of the following publication: Charlie Giattino, Edouard Mathieu, Veronika Samborska, and Max Roser (2023) - “Artificial Intelligence”. Data adapted from Epoch AI. Retrieved from https://archive.ourworldindata.org/20260304-094028/grapher/artificial-intelligence-training-computation.html [online resource] (archived on March 4, 2026).

How to cite this data

In-line citation

If you have limited space (e.g. in data visualizations), you can use this abbreviated in-line citation:

Epoch AI (2025) – with major processing by Our World in Data

Full citation

Epoch AI (2025) – with major processing by Our World in Data. “Computation used to train notable artificial intelligence systems, by domain” [dataset]. Epoch AI, “Parameter, Compute and Data Trends in Machine Learning” [original data]. Retrieved March 5, 2026 from https://archive.ourworldindata.org/20260304-094028/grapher/artificial-intelligence-training-computation.html (archived on March 4, 2026).