Datapoints used to train notable artificial intelligence systems

What you should know about this indicator

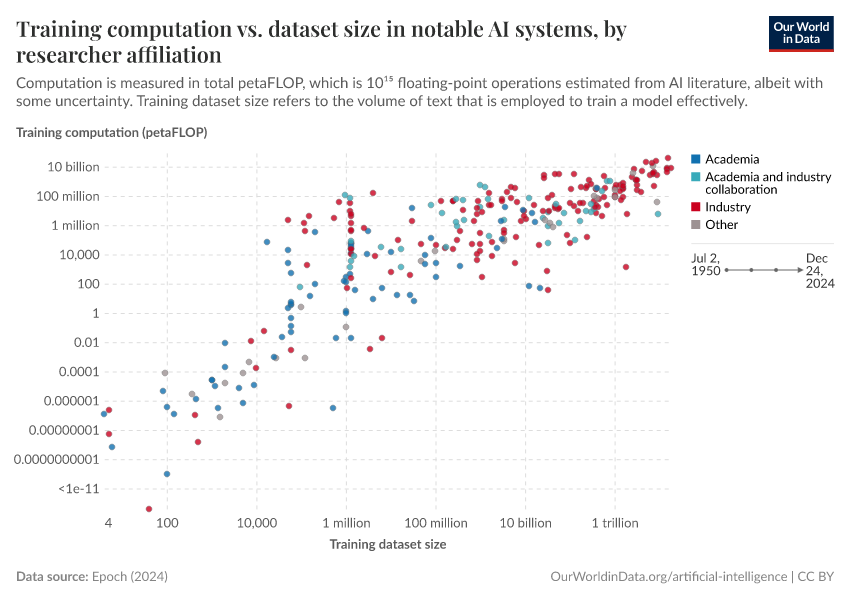

- Training data size measures the volume of unique examples used to train an AI model during its learning phase. It represents the total number of distinct data points the model learns from, counted only once regardless of how many times they're seen during training.

- To understand this concept, imagine teaching someone to identify different bird species. Each unique bird photo you show them is one piece of training data. If you show 100 different photos, your training data size is 100, even if you review those same photos multiple times.

- Since datasets vary by domain, there's no universal unit for measuring size. Text models might count tokens, image models count pictures, and video models count clips. Epoch AI typically uses the smallest unit that triggers a model update during training. For language models that predict the next word, this would be individual tokens.

- Training data size affects model performance. Larger datasets generally let models recognize more nuanced patterns, identify subtle distinctions, and handle diverse real-world scenarios more effectively.
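
The counting rule described above can be illustrated with a minimal sketch. It is not Epoch AI's actual method: the function names, file names, and sample text are hypothetical, and whitespace splitting is only a crude stand-in for a real tokenizer.

```python
def dataset_size(examples):
    """Number of data points in the dataset, each counted once."""
    return len(examples)


def examples_processed(examples, epochs):
    """Total examples shown to the model across all passes (epochs)."""
    return len(examples) * epochs


def token_count(documents):
    """Crude token count: whitespace splitting stands in for a real tokenizer."""
    return sum(len(doc.split()) for doc in documents)


# Bird-photo analogy: 100 different photos, reviewed three times.
bird_photos = [f"bird_{i:03d}.jpg" for i in range(100)]  # hypothetical file names
print(dataset_size(bird_photos))           # 100: the training data size
print(examples_processed(bird_photos, 3))  # 300: not the training data size

# Language-model analogy: the unit is the token, not the document.
corpus = ["the robin sings at dawn", "owls hunt at night"]  # made-up text
print(token_count(corpus))                 # 9
```

Reviewing the photos more often raises the second number but leaves the first unchanged, which is why the indicator reports the first.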

Sources and processing

This data is based on the following sources

How we process data at Our World in Data

All data and visualizations on Our World in Data rely on data sourced from one or several original data providers. Preparing this original data involves several processing steps. Depending on the data, this can include standardizing country names and world region definitions, converting units, calculating derived indicators such as per capita measures, as well as adding or adapting metadata such as the name or the description given to an indicator.

At the link below you can find a detailed description of the structure of our data pipeline, including links to all the code used to prepare data across Our World in Data.

Reuse this work

- All data produced by third-party providers and made available by Our World in Data are subject to the license terms from the original providers. Our work would not be possible without the data providers we rely on, so we ask you to always cite them appropriately (see below). This is crucial to allow data providers to continue doing their work, enhancing, maintaining and updating valuable data.

- All data, visualizations, and code produced by Our World in Data are completely open access under the Creative Commons BY license. You have permission to use, distribute, and reproduce these in any medium, provided the source and authors are credited.

Citations

How to cite this page

To cite this page overall, including any descriptions, FAQs or explanations of the data authored by Our World in Data, please use the following citation:

“Data Page: Datapoints used to train notable artificial intelligence systems”, part of the following publication: Charlie Giattino, Edouard Mathieu, Veronika Samborska, and Max Roser (2023) - “Artificial Intelligence”. Data adapted from Epoch AI. Retrieved from https://archive.ourworldindata.org/20260126-094752/grapher/artificial-intelligence-number-training-datapoints.html [online resource] (archived on January 26, 2026).

How to cite this data

In-line citation

If you have limited space (e.g. in data visualizations), you can use this abbreviated in-line citation:

Epoch AI (2025) – with major processing by Our World in Data

Full citation

Epoch AI (2025) – with major processing by Our World in Data. “Datapoints used to train notable artificial intelligence systems” [dataset]. Epoch AI, “Parameter, Compute and Data Trends in Machine Learning” [original data]. Retrieved February 15, 2026 from https://archive.ourworldindata.org/20260126-094752/grapher/artificial-intelligence-number-training-datapoints.html (archived on January 26, 2026).