Share of FrontierMath problems solved correctly by AI models

What you should know about this indicator

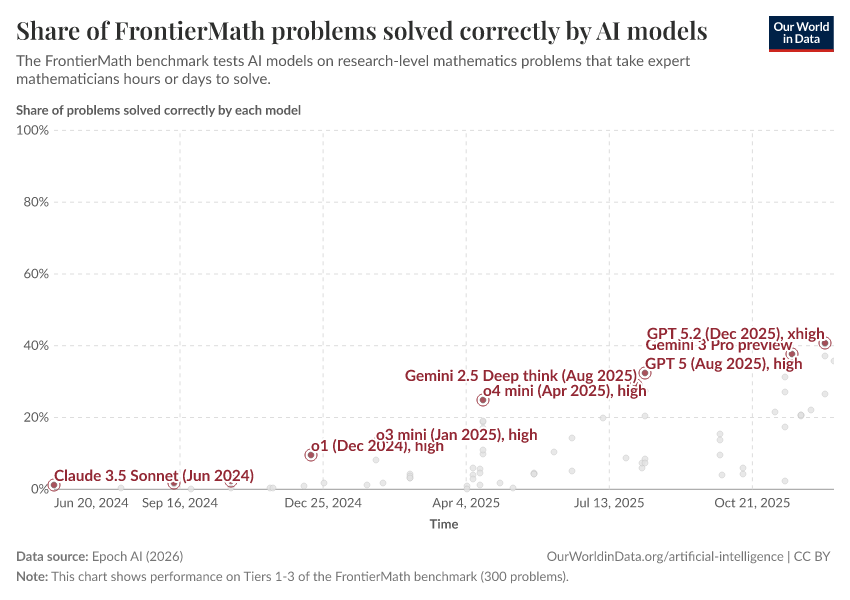

- This indicator shows the share of FrontierMath problems that AI models solve correctly, based on Epoch AI's evaluation.

- FrontierMath is a set of 350 original math problems written by experts, covering many areas of advanced mathematics. Many problems are difficult enough that human specialists might need hours or days to solve them.

- The benchmark has four difficulty tiers. This indicator shows accuracy on Tiers 1–3 (300 problems). Tier 4 contains 50 exceptionally difficult problems and is not included here.

- Scoring is all-or-nothing: models get 1 point for a correct final answer and 0 for anything else, with no partial credit. Models submit their answers as Python code and can use Python while working on problems. This means scores reflect math ability with access to computational tools, not just pen-and-paper reasoning.

- Only 12 problems are publicly available, mainly so researchers can inspect how evaluations work, not to report scores.

- FrontierMath was developed by Epoch AI with funding from OpenAI, whose GPT models are among those evaluated on this benchmark. OpenAI has exclusive access to a subset of the problems.
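
As a rough illustration of the all-or-nothing scoring described above, the share shown by this indicator can be thought of as the number of correctly answered problems divided by the number of problems attempted. The sketch below is not Epoch AI's evaluation code. The function name `share_solved` and the example scores are hypothetical and chosen only to show the arithmetic.

```python
def share_solved(scores):
    """Return the percentage of problems solved, given per-problem 0/1 scores.

    Hypothetical helper for illustration; not part of Epoch AI's tooling.
    """
    if not scores:
        raise ValueError("scores must not be empty")
    # All-or-nothing scoring: each problem is worth exactly 0 or 1 point.
    if any(s not in (0, 1) for s in scores):
        raise ValueError("each score must be 0 or 1 (no partial credit)")
    return 100 * sum(scores) / len(scores)


# Made-up example: 75 correct answers out of the 300 Tier 1-3 problems.
example_scores = [1] * 75 + [0] * 225
print(share_solved(example_scores))  # 25.0
```

Because there is no partial credit, a nearly correct answer counts the same as no answer, so the share moves only when a final answer is fully right.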

Sources and processing

This data is based on the following sources

How we process data at Our World in Data

All data and visualizations on Our World in Data rely on data sourced from one or several original data providers. Preparing this original data involves several processing steps. Depending on the data, this can include standardizing country names and world region definitions, converting units, calculating derived indicators such as per capita measures, as well as adding or adapting metadata such as the name or the description given to an indicator.

At the link below you can find a detailed description of the structure of our data pipeline, including links to all the code used to prepare data across Our World in Data.

Reuse this work

- All data produced by third-party providers and made available by Our World in Data are subject to the license terms from the original providers. Our work would not be possible without the data providers we rely on, so we ask you to always cite them appropriately (see below). This is crucial to allow data providers to continue doing their work, enhancing, maintaining and updating valuable data.

- All data, visualizations, and code produced by Our World in Data are completely open access under the Creative Commons BY license. You have the permission to use, distribute, and reproduce these in any medium, provided the source and authors are credited.

Citations

How to cite this page

To cite this page overall, including any descriptions, FAQs or explanations of the data authored by Our World in Data, please use the following citation:

“Data Page: Share of FrontierMath problems solved correctly by AI models”, part of the following publication: Charlie Giattino, Edouard Mathieu, Veronika Samborska, and Max Roser (2023) - “Artificial Intelligence”. Data adapted from Epoch AI. Retrieved from https://archive.ourworldindata.org/20260203-151107/grapher/ai-frontiermath-over-time.html [online resource] (archived on February 3, 2026).

How to cite this data

In-line citation

If you have limited space (e.g. in data visualizations), you can use this abbreviated in-line citation:

Epoch AI (2026) – with minor processing by Our World in Data

Full citation

Epoch AI (2026) – with minor processing by Our World in Data. “Share of FrontierMath problems solved correctly by AI models” [dataset]. Epoch AI, “Epoch AI Benchmark Data” [original data]. Retrieved February 25, 2026 from https://archive.ourworldindata.org/20260203-151107/grapher/ai-frontiermath-over-time.html (archived on February 3, 2026).