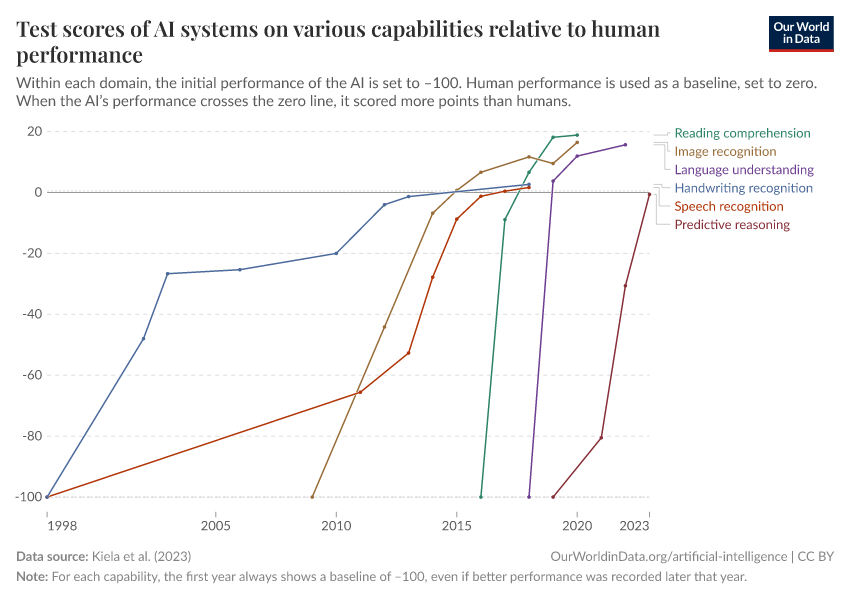

Test scores of AI systems on various capabilities relative to human performance

About this data

Sources and processing

This data is based on the following sources

How we process data at Our World in Data

All data and visualizations on Our World in Data rely on data sourced from one or several original data providers. Preparing this original data involves several processing steps. Depending on the data, this can include standardizing country names and world region definitions, converting units, calculating derived indicators such as per capita measures, as well as adding or adapting metadata such as the name or the description given to an indicator.

At the link below you can find a detailed description of the structure of our data pipeline, including links to all the code used to prepare data across Our World in Data.

Notes on our processing step for this indicator

We mapped the benchmarks to their respective domains based on a review of each benchmark's primary focus and the specific capabilities it tests within AI systems:

- MNIST was mapped to "Handwriting recognition", as it tests AI systems' ability to recognize and classify handwritten digits, a fundamental task in the domain of digital image processing.

- GLUE was categorized under "Language understanding" due to its assessment of models across a variety of linguistic tasks, highlighting the general capabilities of AI in understanding human language.

- ImageNet was categorized as "Image recognition", focusing on the ability of AI systems to accurately identify and categorize images into predefined classes, showcasing the advancements in visual perception.

- SQuAD 1.1 and SQuAD 2.0 were distinguished as "Reading comprehension" and "Reading comprehension with unanswerable questions" respectively. While both benchmarks evaluate reading comprehension, SQuAD 2.0 adds an extra layer of complexity with the introduction of unanswerable questions, demanding deeper understanding and reasoning from AI models.

- BBH was aligned with "Complex reasoning", as it challenges AI with tasks that require not just logical reasoning but also creative thinking, simulating complex problem-solving scenarios.

- Switchboard was associated with "Speech recognition" due to its focus on transcribing and understanding human speech within a conversational context, evaluating AI's ability to process and respond to spoken language.

- MMLU was placed in "General knowledge tests", given its assessment across multiple disciplines and topics, requiring a broad and comprehensive understanding of language.

- HellaSwag was mapped to "Predictive reasoning" for its evaluation of AI's ability to predict logical continuations within given contexts, testing commonsense reasoning and understanding.

- HumanEval was categorized under "Code generation", focusing on AI's capability to understand programming languages and generate code that solves specific problems, highlighting skills in logical thinking and algorithmic problem-solving.

- SuperGLUE was designated as "Nuanced language interpretation" due to its advanced set of linguistic tasks that require deep understanding, reasoning, and interpretation of text, pushing the boundaries of what AI can comprehend.

- GSM8K was mapped to "Math problem-solving", as it tests AI on solving mathematical problems that involve reasoning and logical deduction, reflecting capabilities in numerical understanding and problem-solving.
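
The mapping described in the list above can be sketched as a simple lookup table. This is only an illustrative sketch, not the code used in the Our World in Data pipeline: the names `BENCHMARK_DOMAINS` and `domain_for` are hypothetical, and the benchmark keys use their commonly published spellings (for example GSM8K).

```python
# Illustrative sketch only: a lookup table pairing each benchmark with the
# domain label given in the list above. The names below are assumptions
# made for this example, not identifiers from the actual data pipeline.
BENCHMARK_DOMAINS = {
    "MNIST": "Handwriting recognition",
    "GLUE": "Language understanding",
    "ImageNet": "Image recognition",
    "SQuAD 1.1": "Reading comprehension",
    "SQuAD 2.0": "Reading comprehension with unanswerable questions",
    "BBH": "Complex reasoning",
    "Switchboard": "Speech recognition",
    "MMLU": "General knowledge tests",
    "HellaSwag": "Predictive reasoning",
    "HumanEval": "Code generation",
    "SuperGLUE": "Nuanced language interpretation",
    "GSM8K": "Math problem-solving",
}


def domain_for(benchmark: str) -> str:
    """Return the domain label for a benchmark name (hypothetical helper)."""
    try:
        return BENCHMARK_DOMAINS[benchmark]
    except KeyError:
        # Fail loudly so an unmapped benchmark is noticed rather than dropped.
        raise KeyError(f"No domain mapped for benchmark {benchmark!r}") from None


if __name__ == "__main__":
    for name in ("MNIST", "SQuAD 2.0", "HumanEval"):
        print(f"{name}: {domain_for(name)}")
```

Each benchmark maps to exactly one domain, so a flat dictionary is enough here. Raising an error on unknown names is one possible design choice that keeps an unmapped benchmark from silently passing through.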

Reuse this work

- All data produced by third-party providers and made available by Our World in Data are subject to the license terms from the original providers. Our work would not be possible without the data providers we rely on, so we ask you to always cite them appropriately (see below). This is crucial to allow data providers to continue doing their work, enhancing, maintaining and updating valuable data.

- All data, visualizations, and code produced by Our World in Data are completely open access under the Creative Commons BY license. You have the permission to use, distribute, and reproduce these in any medium, provided the source and authors are credited.

Citations

How to cite this page

To cite this page overall, including any descriptions, FAQs or explanations of the data authored by Our World in Data, please use the following citation:

“Data Page: Test scores of AI systems on various capabilities relative to human performance”, part of the following publication: Charlie Giattino, Edouard Mathieu, Veronika Samborska, and Max Roser (2023) - “Artificial Intelligence”. Data adapted from Kiela et al. Retrieved from https://archive.ourworldindata.org/20250915-140209/grapher/test-scores-ai-capabilities-relative-human-performance.html [online resource] (archived on September 15, 2025).

How to cite this data

In-line citation

If you have limited space (e.g. in data visualizations), you can use this abbreviated in-line citation:

Kiela et al. (2023) – with minor processing by Our World in Data

Full citation

Kiela et al. (2023) – with minor processing by Our World in Data. “Test scores of AI systems on various capabilities relative to human performance” [dataset]. Kiela et al., “Dynabench: Rethinking Benchmarking in NLP” [original data]. Retrieved January 29, 2026 from https://archive.ourworldindata.org/20250915-140209/grapher/test-scores-ai-capabilities-relative-human-performance.html (archived on September 15, 2025).